A Guide to AI Painting

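Large-model technology and AI painting have both surged in popularity. At the request of readers who have been learning along with me, this article shows how to implement AI painting with large-model technology, specifically a small generative adversarial network (GAN) trained on the MNIST digits.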

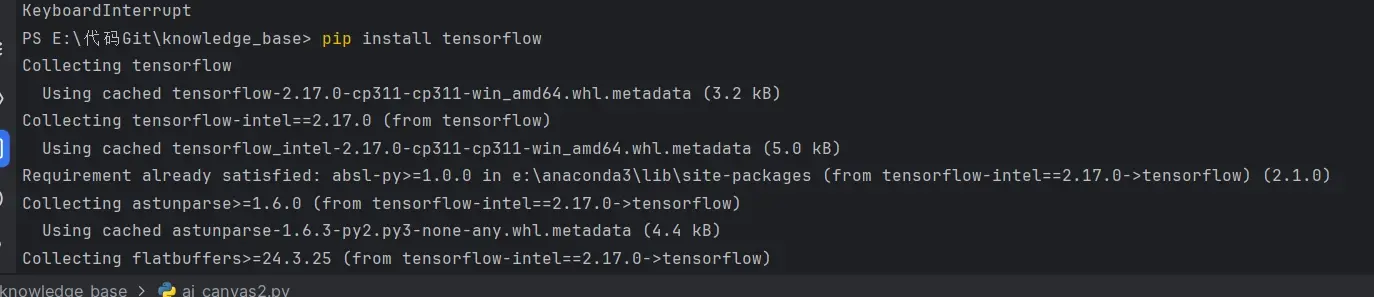

The framework used here is TensorFlow together with Keras, so you first need to install the relevant Python packages.

1. Install the required dependencies

Enter the following commands in the PyCharm terminal:

    pip install tensorflow
    pip install matplotlib
    pip install numpy scikit-learn

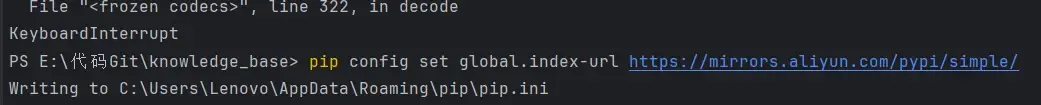
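Before moving on, it can help to confirm that the packages are visible to the interpreter PyCharm is using. The following is a minimal sketch and not part of the original post: the helper name `check_packages` is my own, and it only checks that each module can be found, not that its version is compatible.

```python
from importlib.util import find_spec

# Import names of the packages installed above
# (scikit-learn is imported under the name "sklearn").
REQUIRED = ["tensorflow", "matplotlib", "numpy", "sklearn"]


def check_packages(names):
    """Return {module_name: bool} telling whether each module can be located."""
    return {name: find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_packages(REQUIRED).items():
        print(f"{name}: {'installed' if ok else 'MISSING'}")
```

If any line reports MISSING, the most likely cause is that pip installed into a different interpreter than the one selected for the project.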

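If installation is slow, point pip at a faster package index. The Alibaba Cloud mirror is recommended:

    pip config set global.index-url https://mirrors.aliyun.com/pypi/simple/

Alternatively, you can switch to the Tsinghua University mirror:

    pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple/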

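Once the mirror is set, installation becomes much faster, about 10 MB per second in my case.

Setting the mirror and the resulting install speed: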

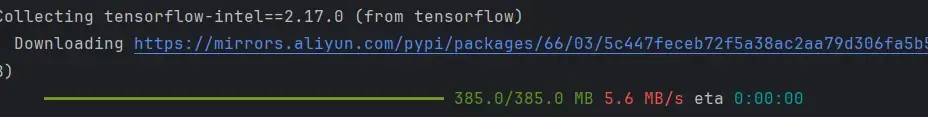

2. Write the code

My first draft of the code was as follows.

(1) Import the required packages:

    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.models import Model
    # Dropout is included because the discriminator below uses it
    from tensorflow.keras.layers import (Input, Dense, Reshape, Flatten, Conv2D,
                                         Conv2DTranspose, BatchNormalization,
                                         LeakyReLU, Dropout)
    from tensorflow.keras.optimizers import Adam
    import matplotlib.pyplot as plt

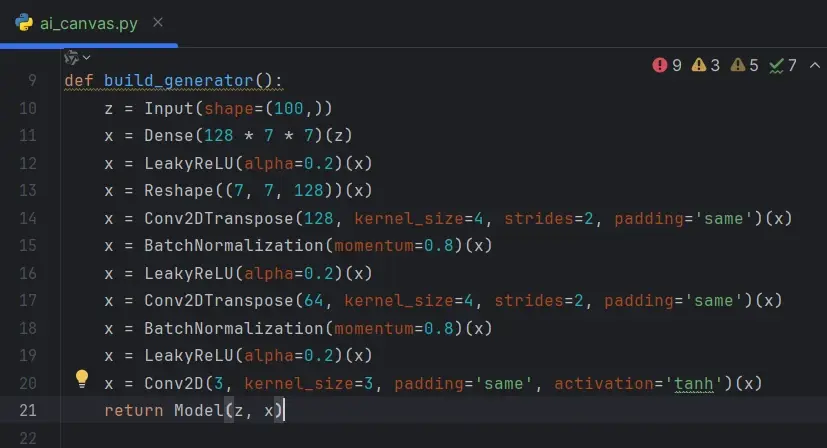

(2) Define the generator model:

    def build_generator():
        # Map a 100-dimensional noise vector to a 7x7x128 feature map
        z = Input(shape=(100,))
        x = Dense(128 * 7 * 7)(z)
        x = LeakyReLU(alpha=0.2)(x)
        x = Reshape((7, 7, 128))(x)
        # Upsample 7x7 -> 14x14
        x = Conv2DTranspose(128, kernel_size=4, strides=2, padding='same')(x)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)
        # Upsample 14x14 -> 28x28
        x = Conv2DTranspose(64, kernel_size=4, strides=2, padding='same')(x)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)
        # tanh keeps the output pixels in [-1, 1]
        x = Conv2D(3, kernel_size=3, padding='same', activation='tanh')(x)
        return Model(z, x)

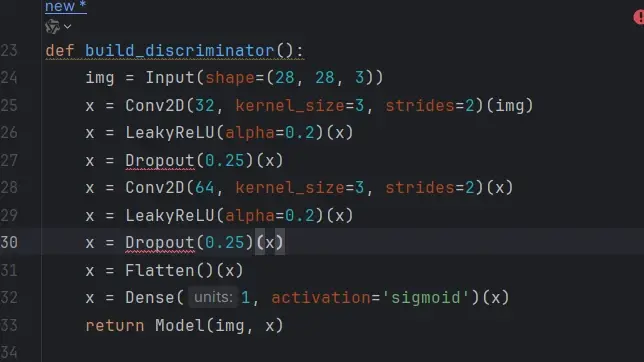
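To see why the generator ends up at 28x28, the spatial sizes can be traced by hand. This is a small sketch added for illustration (the function name `transposed_conv_same` is my own): with `padding='same'`, a Keras `Conv2DTranspose` layer multiplies the height and width by its stride, and the final `Conv2D` with `padding='same'` and stride 1 leaves them unchanged.

```python
def transposed_conv_same(size, stride):
    """Spatial size after a Conv2DTranspose layer with padding='same'."""
    return size * stride


size = 7                      # height/width after Reshape((7, 7, 128))
sizes = [size]
for stride in (2, 2):         # the two Conv2DTranspose layers, both strides=2
    size = transposed_conv_same(size, stride)
    sizes.append(size)

print(sizes)  # [7, 14, 28]
```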

(3) Define the discriminator model:

    def build_discriminator():
        img = Input(shape=(28, 28, 3))
        # Two strided convolutions downsample the image
        x = Conv2D(32, kernel_size=3, strides=2)(img)
        x = LeakyReLU(alpha=0.2)(x)
        x = Dropout(0.25)(x)
        x = Conv2D(64, kernel_size=3, strides=2)(x)
        x = LeakyReLU(alpha=0.2)(x)
        x = Dropout(0.25)(x)
        x = Flatten()(x)
        # Single sigmoid output: probability that the image is real
        x = Dense(1, activation='sigmoid')(x)
        return Model(img, x)

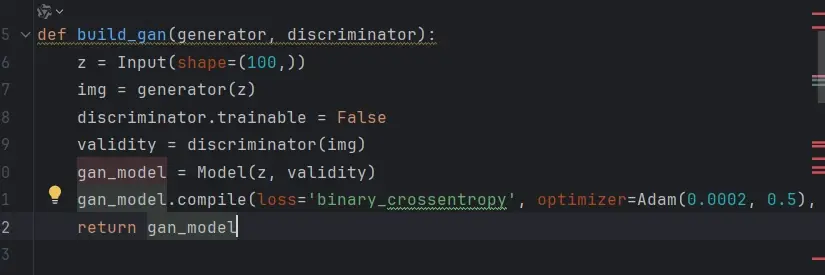
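The discriminator's `Conv2D` layers do not set `padding`, so Keras uses its default, `'valid'`. As a rough sketch (the helper name `conv_valid` is my own), the feature-map sizes and the length of the flattened vector fed to the final `Dense` layer can be worked out like this:

```python
def conv_valid(size, kernel, stride):
    """Spatial size after a Conv2D layer with padding='valid'."""
    return (size - kernel) // stride + 1


size = 28
sizes = [size]
for _ in range(2):            # two Conv2D layers, kernel_size=3, strides=2
    size = conv_valid(size, kernel=3, stride=2)
    sizes.append(size)

flat_features = size * size * 64   # 64 filters in the last Conv2D layer
print(sizes, flat_features)        # [28, 13, 6] 2304
```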

(4) Build and compile the GAN model:

    def build_gan(generator, discriminator):
        z = Input(shape=(100,))
        img = generator(z)
        # Freeze the discriminator so that only the generator is updated
        # when the combined model is trained
        discriminator.trainable = False
        validity = discriminator(img)
        gan_model = Model(z, validity)
        gan_model.compile(loss='binary_crossentropy', optimizer=Adam(0.0002, 0.5), metrics=['accuracy'])
        return gan_model

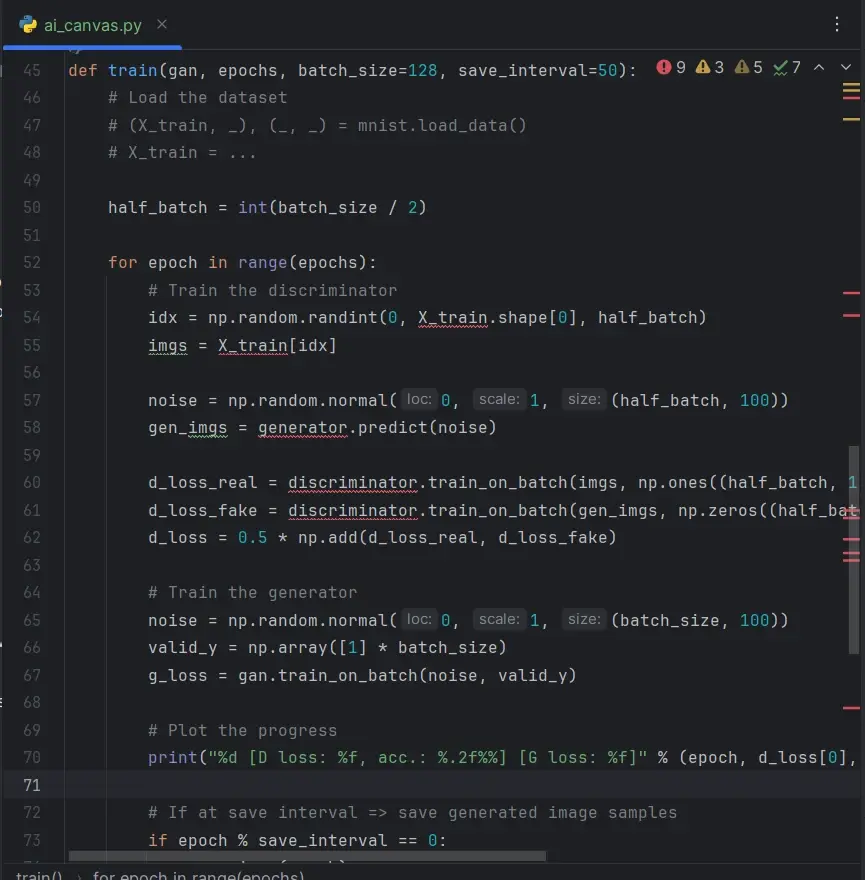
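Both models are compiled with `binary_crossentropy`. The NumPy sketch below is my own illustration of that loss, not Keras's implementation (the clipping constant `eps` is an assumption for numerical safety). It shows why the generator is trained with the label 1: the loss is small when the discriminator is fooled into scoring fake images close to 1, and large when it catches them.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy between labels and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1 - eps)   # avoid log(0)
    losses = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return float(np.mean(losses))


valid = np.ones((4, 1))            # generator step: fake images labelled "real"
fooled = np.full((4, 1), 0.9)      # discriminator scores fakes as likely real
caught = np.full((4, 1), 0.1)      # discriminator scores fakes as likely fake

fooled_loss = binary_crossentropy(valid, fooled)
caught_loss = binary_crossentropy(valid, caught)
print(fooled_loss < caught_loss)   # True
```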

(5) Train the model:

    def train(gan, epochs, batch_size=128, save_interval=50):
        # Load the dataset
        # (X_train, _), (_, _) = mnist.load_data()
        # X_train = ...
        half_batch = int(batch_size / 2)

        for epoch in range(epochs):
            # Train the discriminator on half a batch of real images
            # and half a batch of generated images
            idx = np.random.randint(0, X_train.shape[0], half_batch)
            imgs = X_train[idx]
            noise = np.random.normal(0, 1, (half_batch, 100))
            gen_imgs = generator.predict(noise)
            d_loss_real = discriminator.train_on_batch(imgs, np.ones((half_batch, 1)))
            d_loss_fake = discriminator.train_on_batch(gen_imgs, np.zeros((half_batch, 1)))
            d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

            # Train the generator: it wants the discriminator to output 1
            noise = np.random.normal(0, 1, (batch_size, 100))
            valid_y = np.array([1] * batch_size)
            g_loss = gan.train_on_batch(noise, valid_y)

            # Plot the progress
            print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100 * d_loss[1], g_loss))

            # If at save interval => save generated image samples
            if epoch % save_interval == 0:
                save_imgs(epoch)

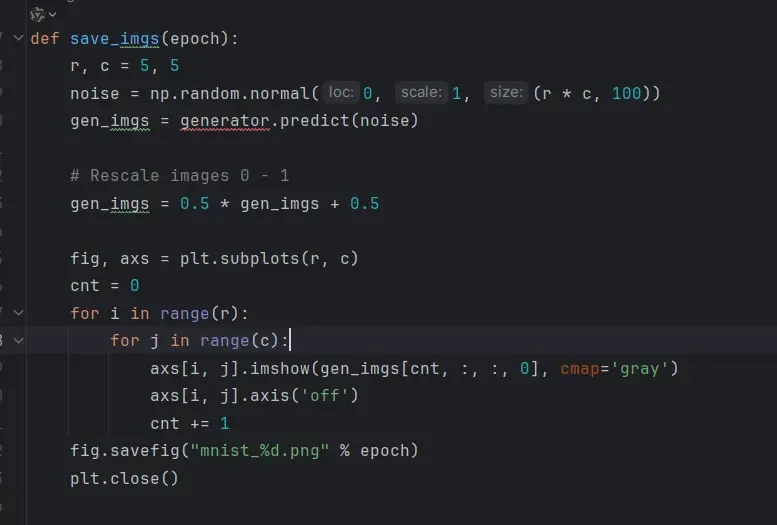
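The line `d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)` averages the real-batch and fake-batch results element by element. Because the discriminator is compiled with `metrics=['accuracy']`, each `train_on_batch` call returns a `[loss, accuracy]` pair. The numbers in this sketch are made up purely to illustrate the arithmetic:

```python
import numpy as np

# Hypothetical [loss, accuracy] pairs, as returned by train_on_batch
d_loss_real = [0.62, 0.75]   # on the real half-batch
d_loss_fake = [0.70, 0.50]   # on the generated half-batch

# Element-wise mean: index 0 is the average loss, index 1 the average accuracy
d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

print("[D loss: %f, acc.: %.2f%%]" % (d_loss[0], 100 * d_loss[1]))
# [D loss: 0.660000, acc.: 62.50%]
```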

(6) Save the generated images:

    def save_imgs(epoch):
        # Draw a 5x5 grid of generated samples
        r, c = 5, 5
        noise = np.random.normal(0, 1, (r * c, 100))
        gen_imgs = generator.predict(noise)

        # Rescale images 0 - 1
        gen_imgs = 0.5 * gen_imgs + 0.5

        fig, axs = plt.subplots(r, c)
        cnt = 0
        for i in range(r):
            for j in range(c):
                axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')
                axs[i, j].axis('off')
                cnt += 1
        fig.savefig("mnist_%d.png" % epoch)
        plt.close()
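The rescaling step in `save_imgs` relies on the generator's `tanh` output lying in [-1, 1]. The training images need to be put in the same range, which the complete code later in this article does with `X_train / 127.5 - 1`. A short sketch of the round trip on three sample pixel values:

```python
import numpy as np

pixels = np.array([0.0, 127.5, 255.0])   # raw 8-bit pixel range

scaled = pixels / 127.5 - 1.0            # training range expected by tanh
restored = 0.5 * scaled + 0.5            # display range used by imshow

print(scaled)    # [-1.  0.  1.]
print(restored)  # [0.  0.5 1. ]
```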

The program has no main entry point, so it needs to be adjusted. Running it also produced the following error:

    d_loss_real = discriminator.train_on_batch(imgs, np.ones((half_batch, 1)))
    line 1001, in _assert_compile_called
        raise ValueError(msg)
    ValueError: You must call compile() before using the model.

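Solution:

The error occurs because compile was never called on the discriminator before discriminator.train_on_batch. Make sure the discriminator is compiled before it is used:

    # Compile the discriminator
    discriminator.compile(loss='binary_crossentropy', optimizer=Adam(0.0002, 0.5), metrics=['accuracy'])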

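A second error then appeared:

    TypeError: must be real number, not list

Solution:

This error means that a value passed to a %f placeholder in the print statement is a list rather than a single number. Because the GAN is compiled with metrics=['accuracy'], gan.train_on_batch returns a [loss, accuracy] list, so the loss has to be extracted with g_loss[0], just as d_loss is already indexed.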
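The failure is easy to reproduce without TensorFlow. In this sketch the `[loss, accuracy]` values are invented for illustration; the point is only that `%f` rejects a list and accepts the indexed element:

```python
g_loss = [0.69, 0.5]   # hypothetical [loss, accuracy] pair

raised = False
try:
    # Passing the whole list to %f fails
    print("[G loss: %f]" % (g_loss,))
except TypeError as exc:
    raised = True
    print("TypeError:", exc)

# Indexing out the loss value works
fixed = "[G loss: %f]" % g_loss[0]
print(fixed)  # [G loss: 0.690000]
```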

The complete code after these adjustments:

    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.models import Model
    from tensorflow.keras.layers import (Input, Dense, Reshape, Flatten, Conv2D,
                                         Conv2DTranspose, BatchNormalization,
                                         LeakyReLU, Dropout)
    from tensorflow.keras.optimizers import Adam
    import matplotlib.pyplot as plt

    # Build the generator model
    def build_generator():
        z = Input(shape=(100,))
        x = Dense(128 * 7 * 7)(z)
        x = LeakyReLU(alpha=0.2)(x)
        x = Reshape((7, 7, 128))(x)
        x = Conv2DTranspose(128, kernel_size=4, strides=2, padding='same')(x)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)
        x = Conv2DTranspose(64, kernel_size=4, strides=2, padding='same')(x)
        x = BatchNormalization(momentum=0.8)(x)
        x = LeakyReLU(alpha=0.2)(x)
        x = Conv2D(1, kernel_size=3, padding='same', activation='tanh')(x)  # generate single-channel images
        return Model(z, x)

    # Build the discriminator model
    def build_discriminator():
        img = Input(shape=(28, 28, 1))  # single-channel input images
        x = Conv2D(32, kernel_size=3, strides=2)(img)
        x = LeakyReLU(alpha=0.2)(x)
        x = Dropout(0.25)(x)
        x = Conv2D(64, kernel_size=3, strides=2)(x)
        x = LeakyReLU(alpha=0.2)(x)
        x = Dropout(0.25)(x)
        x = Flatten()(x)
        x = Dense(1, activation='sigmoid')(x)
        return Model(img, x)

    # Build and compile the GAN model
    def build_gan(generator, discriminator):
        z = Input(shape=(100,))
        img = generator(z)
        discriminator.trainable = False
        validity = discriminator(img)
        gan_model = Model(z, validity)
        gan_model.compile(loss='binary_crossentropy', optimizer=Adam(0.0002, 0.5), metrics=['accuracy'])
        return gan_model

    # Train the model
    def train(gan, epochs, batch_size=128, save_interval=50):
        # Load the dataset
        (X_train, _), (_, _) = tf.keras.datasets.mnist.load_data()
        X_train = X_train / 127.5 - 1.  # normalize to [-1, 1]
        X_train = np.expand_dims(X_train, axis=3)  # add a channel axis: (N, 28, 28, 1)
        half_batch = int(batch_size / 2)

        for epoch in range(epochs):
            # Train the discriminator
            idx = np.random.randint(0, X_train.shape[0], half_batch)
            imgs = X_train[idx]
            noise = np.random.normal(0, 1, (half_batch, 100))
            gen_imgs = generator.predict(noise)
            d_loss_real = discriminator.train_on_batch(imgs, np.ones((half_batch, 1)))
            d_loss_fake = discriminator.train_on_batch(gen_imgs, np.zeros((half_batch, 1)))
            d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

            # Train the generator
            noise = np.random.normal(0, 1, (batch_size, 100))
            valid_y = np.array([1] * batch_size)
            g_loss = gan.train_on_batch(noise, valid_y)

            # Print the training progress
            print("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100 * d_loss[1], g_loss[0]))

            # At each save interval, save a sample of generated images
            if epoch % save_interval == 0:
                save_imgs(epoch)

    # Save the generated images
    def save_imgs(epoch):
        r, c = 5, 5
        noise = np.random.normal(0, 1, (r * c, 100))
        gen_imgs = generator.predict(noise)

        # Rescale the images back to the [0, 1] range
        gen_imgs = 0.5 * gen_imgs + 0.5

        fig, axs = plt.subplots(r, c)
        cnt = 0
        for i in range(r):
            for j in range(c):
                axs[i, j].imshow(gen_imgs[cnt, :, :, 0], cmap='gray')
                axs[i, j].axis('off')
                cnt += 1
        fig.savefig("mnist_%d.png" % epoch)
        plt.close()

    # Main entry point
    if __name__ == '__main__':
        # Build the generator and the discriminator
        generator = build_generator()
        discriminator = build_discriminator()

        # Compile the discriminator
        discriminator.compile(loss='binary_crossentropy', optimizer=Adam(0.0002, 0.5), metrics=['accuracy'])

        # Build and compile the GAN model
        gan = build_gan(generator, discriminator)

        # Train the model
        train(gan, epochs=10000, batch_size=32, save_interval=200)

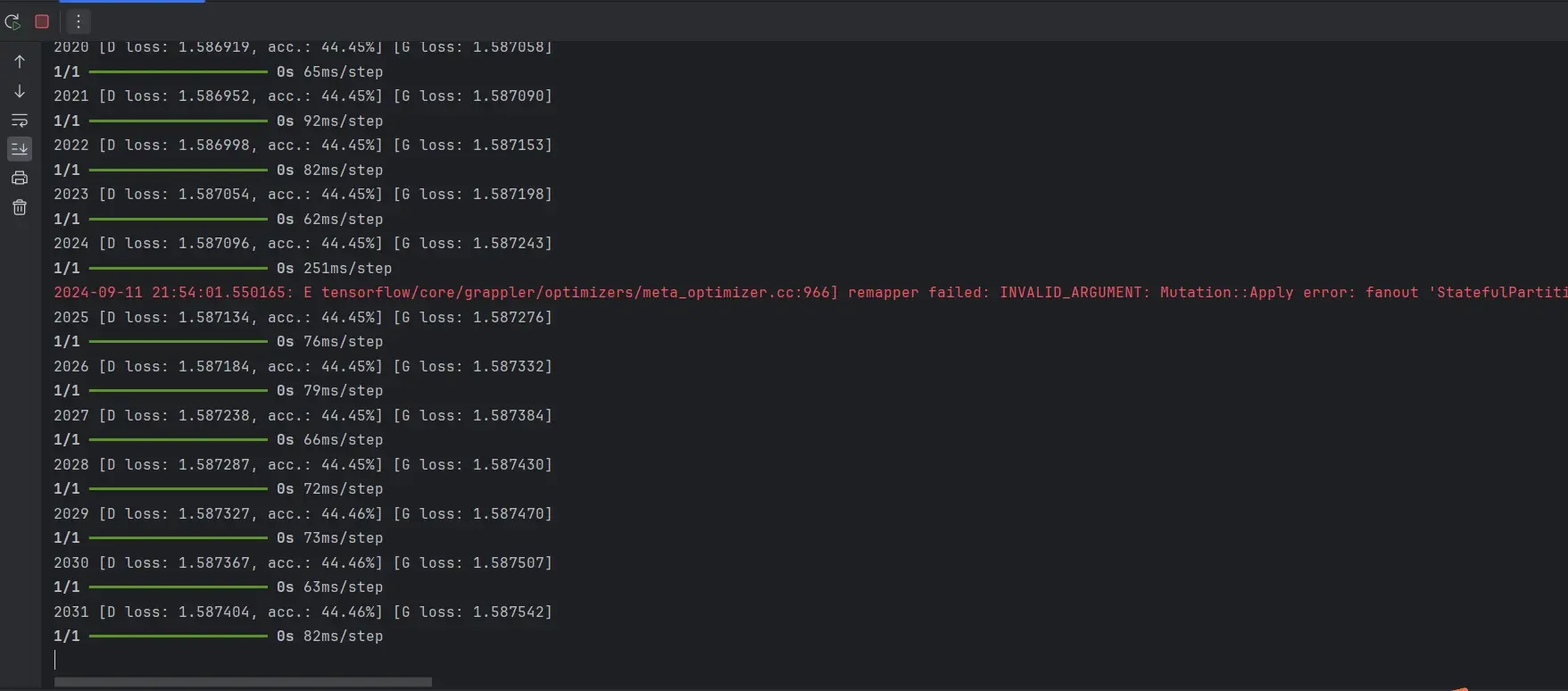

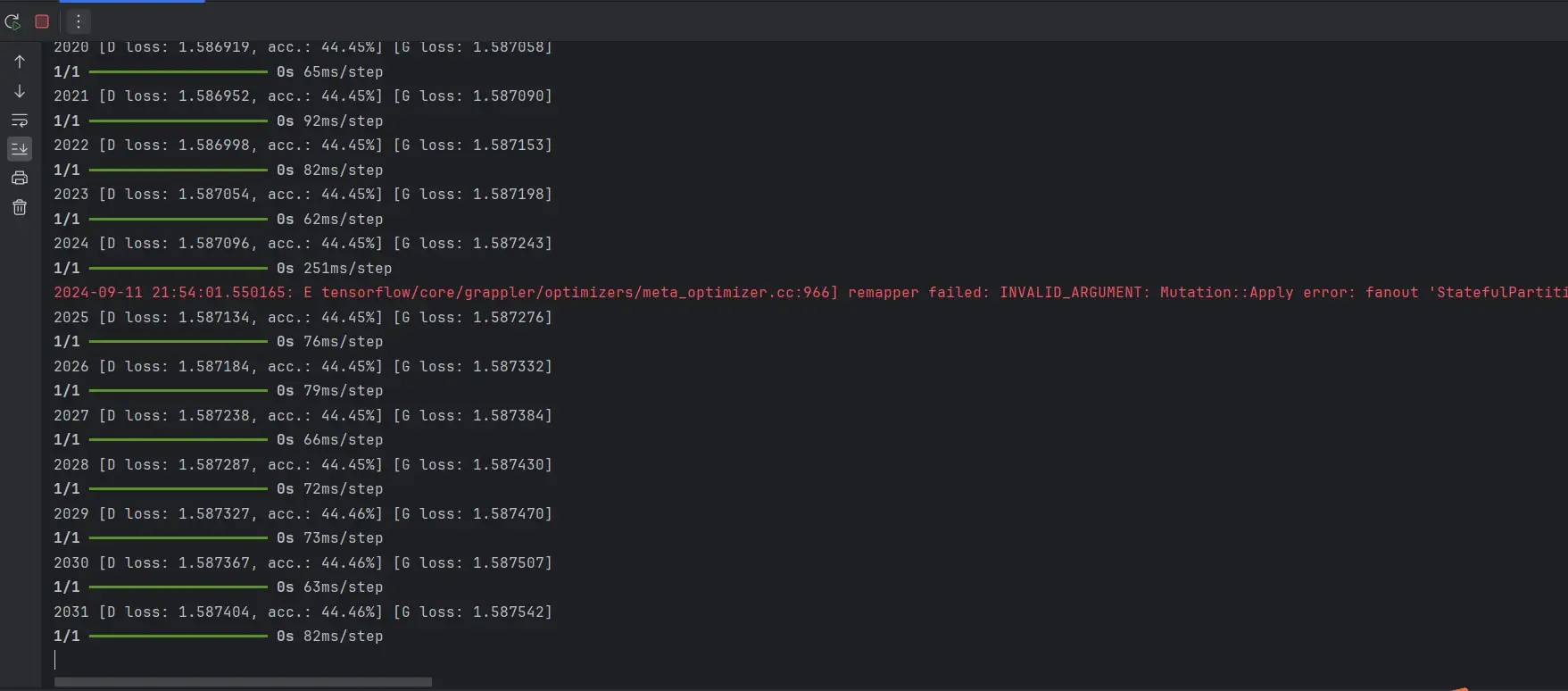

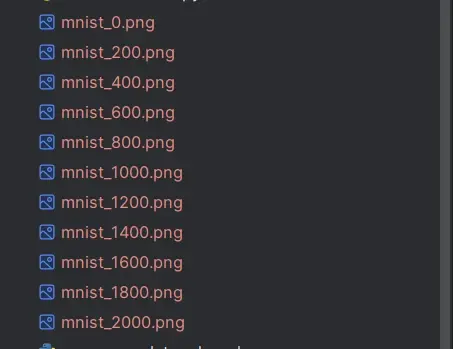

The model trains for 10,000 iterations with a save interval of 200, so one image is saved every 200 iterations.
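As a quick check of that schedule, the condition `epoch % save_interval == 0` from the training loop can be evaluated on its own. This sketch only mirrors the loop's counting logic and the `mnist_%d.png` naming pattern; it does not train anything:

```python
epochs, save_interval = 10000, 200

# Iterations at which the training loop calls save_imgs(epoch)
saved = [epoch for epoch in range(epochs) if epoch % save_interval == 0]
filenames = ["mnist_%d.png" % epoch for epoch in saved]

print(len(saved), saved[0], saved[-1])   # 50 0 9800
print(filenames[:3])                     # ['mnist_0.png', 'mnist_200.png', 'mnist_400.png']
```

So a full run should leave 50 image files in the project directory, starting with mnist_0.png.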

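Right-click the program and run it. The console shows the following output:

Training continues, printing the discriminator's loss and accuracy and the generator's loss at every iteration. Check the project directory and you will see that images are being generated one after another: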

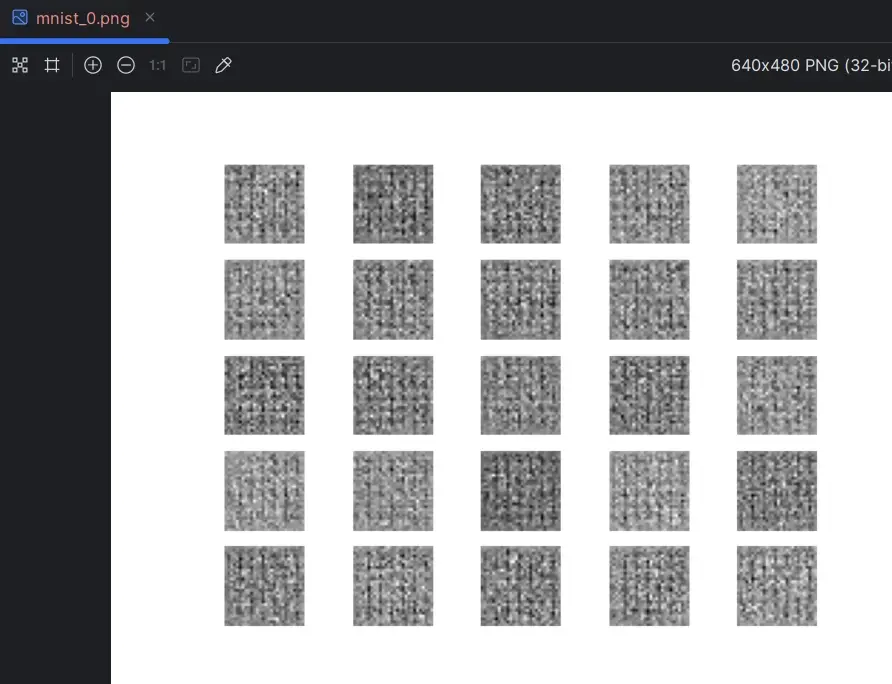

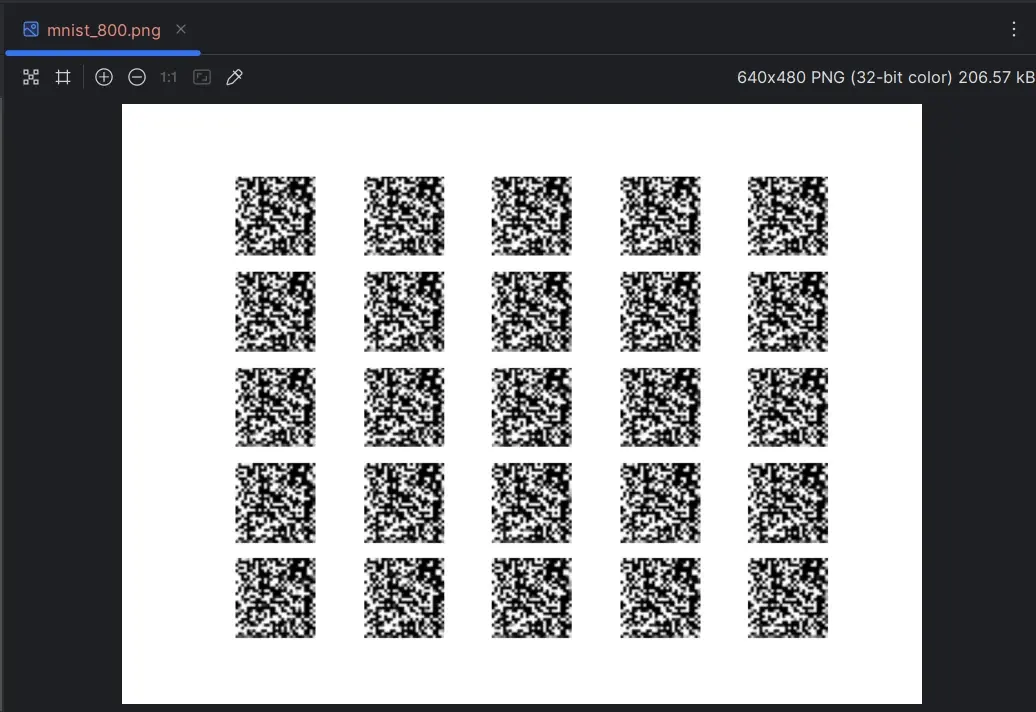

Open the images:

mnist_0.png

mnist_800.png

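The generated images vary in form. You can tune the training parameters further to build an AI painting setup of your own.

This article has shown how I used large-model technology to implement AI painting. For more detailed AI tutorials, follow the author.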